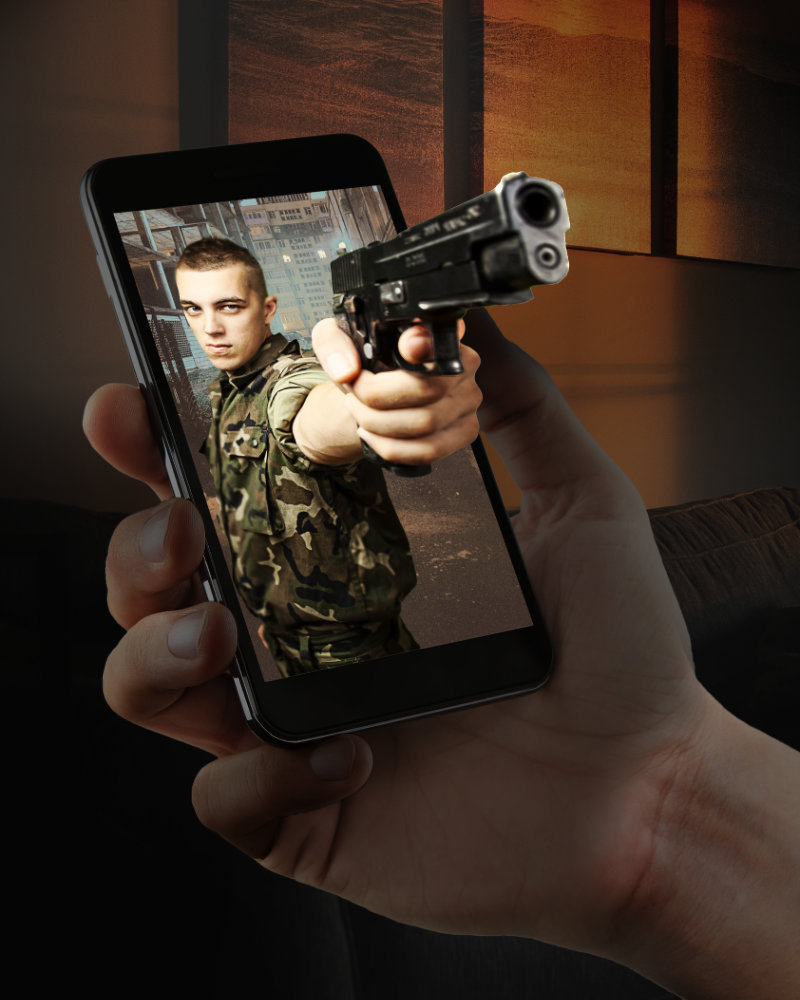

Much has been written to debunk the thesis, popular among gun-rights advocates, that “only a good guy with a gun can stop a bad guy with a gun.” The refutations tend to rely on statistics showing that increased gun ownership leads to increases in rates of violence, or that the instances in which civilians are able to use guns to stop crimes are vanishingly rare. But there’s one simple, common-sense argument that I’ve never seen put forward: Practically speaking, there is no difference between a “good guy” and a “bad guy.”

Given a collection of random people, would you be able to sort them into two groups, one good and one bad? Of course not — there is no real-world marker of goodness or badness. Clearly, then, we can’t expect a bullet to know whether it’s been fired by a good guy’s gun or a bad guy’s gun. It’s just going to go wherever the gun is pointed.

Therefore, it’s meaningless to say that “a good guy with a gun can stop a bad guy with a gun.” All we can say is that one person with a gun can stop another person with a gun. It may be that one is a more skilled marksman than the other, or that one is luckier than the other, but which individual is “good” and which is “bad” doesn’t enter into it.

How, then, can we make it more likely that in any violent confrontation, the right person will prevail? Well, one possibility is that we, through our democratically elected government, could agree on who we want to represent our collective definition of goodness, and allow those people — and only those people — to have guns. We could train them to use their guns conscientiously and safely. We could make them responsible for protecting the rest of us (who are unarmed) against anyone who attempts to do us harm. We could make them easily identifiable as “good guys” by giving them uniforms… maybe even badges. (OK, you see where I’m going with this.)

Unfortunately, this proposal can only work if it’s built on a foundation of trust, and trust is in short supply. There are plenty of people who say, “I’m not going to put my safety in the hands of a government whose interests might be contrary to mine. The only person I can trust is myself. I refuse to relinquish the weapons that would allow me to defend myself against those who wish to hurt me — possibly including the government itself.”

Now, here’s where it gets interesting, because this is the point where I’d feel the urge to take sides: I’d want to say, “Yes, but in taking that position, you’re putting all of us in danger. If everyone has equal access to guns, there’s no guarantee that the ‘good’ person will defeat the ‘bad’ person in any given conflict — the outcome is just as likely to be the opposite.”

A thoughtful gun-rights advocate might then respond, “I acknowledge that if everyone has the right to use a gun, some people are going to die unnecessarily. But if that’s what’s necessary to preserve our absolute right to self-defense, I’m willing to make that tradeoff.”

And therein lies the problem: Every policy decision involves a tradeoff. The difference between the two sides in an argument usually comes down to what each side is willing to trade away in exchange for something they consider more valuable.

This may seem like a weird change of topic, but consider the debate over encryption. Several digital communication companies protect their users’ privacy by providing end-to-end encryption — meaning that if I send you a message, it will be transmitted in a highly secure format that can be read only on your device. End-to-end encryption enrages law enforcement authorities, who have long been able to listen in on phone conversations and depend on being able to do the same with digital communications. In order to stop terrorist acts before they happen, they say, they must have a way to find out what potential terrorists are saying to each other. But companies such as Apple tell them, “We’re sorry, but messages sent via iMessage are so secure that even we can’t read them.”

The U.S. government has demanded that Apple build a back door into its communications software that would allow law enforcement to read encrypted messages in cases of potential threats to national security. Apple has refused, and privacy-rights advocates — myself among them — support Apple’s stance. The government, they say, could abuse its access by illegally tapping into conversations that have nothing to do with national security, and then using the gathered information for its own ends. To protect themselves from government agents who can’t be trusted, people should have the right to communicate privately.

Someone could easily say to a privacy-rights advocate, “But in taking that position, you’re putting us all in danger. You’re making it more difficult for law enforcement to predict and prevent terrorist acts.”

And I, as a privacy-rights advocate, would have to respond, “If that’s what’s necessary to preserve our absolute right to privacy, I’m willing to make that tradeoff.”

In other words, there’s no such thing as a “good guy with an argument” and a “bad guy with an argument.” Both sides are making the same argument; we’re just filling in the blanks differently. In the absence of a perfect solution to a problem (which almost never exists), each of us has no choice but to weigh one potential outcome against another, using our own values as a guide.